Model | MPT

- Related Project: private

- Category: Paper Review

- Date: 2023-08-12

Introducing MPT-7B: A New Standard for Open-Source, Commercially Usable LLMs

- url: https://www.mosaicml.com/blog/mpt-7b

- githuib: https://github.com/mosaicml/TextGenerationLLM-foundry

TL;DR

- MPT 시리즈 공개: MPT-7B 모델을 포함한 여러가지 파인튜닝 버전 공개

- 상업적 사용 가능: LLaMA 시리즈와 차별화된 상업적 사용 라이선스 제공

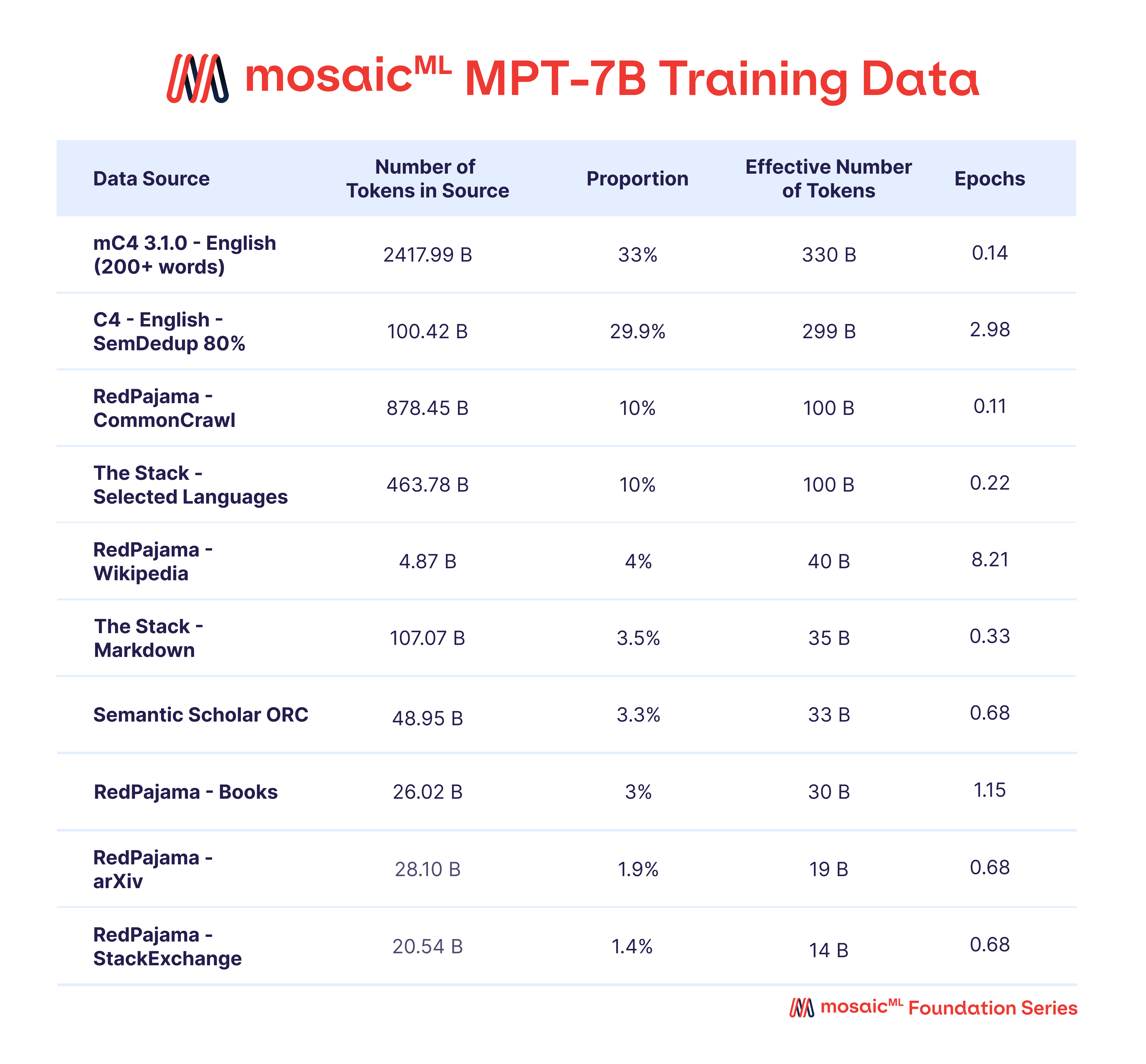

- 대규모 training dataset와 최적화: 1조 토큰 훈련, FlashAttention 및 ALiBi 적용

Introducing MPT-7B, the first entry in our MosaicML Foundation Series. MPT-7B is a transformer trained from scratch on 1T tokens of text and code. It is open source, available for commercial use, and matches the quality of LLaMA-7B. MPT-7B was trained on the MosaicML platform in 9.5 days with zero human intervention at a cost of ~$200k.

-

Large Language Models and the Open-Source Community

Large language models (LLMs) are changing the world, but for those outside well-resourced industry labs, it can be extremely difficult to train and deploy these models. This has led to a flurry of activity centered on open-source LLMs, such as the LLaMA series from Meta, the Pythia series from EleutherAI, the StableLM series from StabilityAI, and the OpenLLaMA model from Berkeley AI Research.

Today, we at MosaicML are releasing a new model series called MPT (MosaicML Pretrained Transformer) to address the limitations of the above models and finally provide a commercially-usable, open-source model that matches (and - in many ways - surpasses) LLaMA-7B. Now you can train, finetune, and deploy your own private MPT models, either starting from one of our checkpoints or training from scratch. For inspiration, we are also releasing three finetuned models in addition to the base MPT-7B: MPT-7B-Instruct, MPT-7B-Chat, and MPT-7B-StoryWriter-65k+, the last of which uses a context length of 65k tokens!

- LLaMA-7B와 어느 면에서는 뛰어넘는 상업적으로 활용 가능한 오픈 소스 모델인 MPT (MosaicML pre-trained 트랜스포머) 시리즈를 공개

- 체크포인트 중 하나에서 시작하거나 처음부터 훈련을 진행하여 고유한 MPT 모델을 훈련하고 세밀하게 조정하며 배포할 수 있으며, MPT-7B 기본 모델 외에도 MPT-7B-Instruct, MPT-7B-Chat, 그리고 context length가 65,000 토큰인 MPT-7B-StoryWriter-65k+와 같은 세 가지 세밀하게 조정된 모델도 공개

- MPT Model Series Features

Our MPT model series is:

- Licensed for commercial use (unlike LLaMA).

- Trained on a large amount of data (1T tokens like LLaMA vs. 300B for Pythia, 300B for OpenLLaMA, and 800B for StableLM).

- Prepared to handle extremely long inputs thanks to ALiBi (we trained on up to 65k inputs and can handle up to 84k vs. 2k-4k for other open source models).

- Optimized for fast training and inference (via FlashAttention and FasterTransformer).

- Equipped with highly efficient open-source training code.

- 상업적 이용 허가 (LLaMA1과는 다름).

- 대량의 데이터로 훈련됨 (LLaMA와 같이 1조 토큰 대비 Pythia는 3000억, OpenLLaMA는 3000억, 그리고 StableLM은 8000억).

- ALiBi를 통해 긴 입력 처리 가능 (65,000까지의 입력에 대해 훈련되었으며 84,000까지 처리 가능, 다른 오픈 소스 모델은 2,000-4,000).

- 빠른 훈련과 인퍼런스를 위해 최적화됨 (FlashAttention과 FasterTransformer를 통해)

- 효율적인 오픈 소스 훈련 코드를 활용

MPT를 다양한 벤치마크에서 엄격하게 평가했으며, MPT는 LLaMA-7B가 설정한 높은 품질 기준을 충족하였음.

We rigorously evaluated MPT on a range of benchmarks, and MPT met the high quality bar set by LLaMA-7B.

-

Introducing MPT Model Variants We trained MPT-7B with ZERO human intervention from start to finish: over 9.5 days on 440 GPUs, the MosaicML platform detected and addressed 4 hardware failures and resumed the training run automatically, and - due to architecture and optimization improvements we made - there were no catastrophic loss spikes. Check out our empty training logbook for MPT-7B!

MPT models are GPT-style decoder-only transformers with several improvements: performance-optimized layer implementations, architecture changes that provide greater training stability, and the elimination of context length limits by replacing positional embeddings with ALiBi. Thanks to these modifications, customers can train MPT models with efficiency (40-60% MFU) without diverging from loss spikes and can serve MPT models with both standard HuggingFace pipelines and FasterTransformer.

Today, we are releasing the base MPT model and three other finetuned variants that demonstrate the many ways of building on this base model:

- MPT-7B Base:

MPT-7B Base is a decoder-style transformer with 6.7B parameters. It was trained on 1T tokens of text and code that was curated by MosaicML’s data team. This base model includes FlashAttention for fast training and inference and ALiBi for fine-tuning and extrapolation to long context lengths.

- 빠른 훈련과 인퍼런스를 위해 FlashAttention을 포함하고 있으며, MPT-7B를 fine-tuning하고 긴 context length로의 인퍼런스를 위해 ALiBi를 사용

- MPT-7B Base

- MPT-7B matches the quality of LLaMA-7B. It is available for commmercial use and features:

- 7B parameters

- 1T pretraining tokens

- ALG BiLinear (ALiBi) for extrapolation beyond context lengths of 2-4k tokens

- FlashAttention for efficient training and inference

- Cost: approximately $200k for 9.5 days of training

- MPT-7B-StoryWriter-65k+:

MPT-7B-StoryWriter-65k+ is a model designed to read and write stories with super long context lengths. It was built by fine-tuning MPT-7B with a context length of 65k tokens on a filtered fiction subset of the books3 dataset. At inference time, thanks to ALiBi, MPT-7B-StoryWriter-65k+ can extrapolate even beyond 65k tokens, and we have demonstrated generations as long as 84k tokens on a single node of A100-80GB GPUs.

- MPT-7B-StoryWriter-65k+는 긴 context length로 이야기를 읽고 쓰는 모델

- MPT-7B를 65,000 토큰의 context length로 fine-tuning하여 books3 데이터셋의 소설 하위 집합에서 훈련

- 인퍼런스 시에는 ALiBi의 덕분에 MPT-7B-StoryWriter-65k+는 65,000 토큰을 넘어서 인퍼런스할 수 있으며, A100-80GB GPU의 단일 노드에서 최대 84,000 토큰까지 생성 가능한 성과를 보임

- License: Apache-2.0

- MPT-7B-StoryWriter-65k+

- MPT-7B-StoryWriter-65k+ is a model for reading and writing stories. It was finetuned on a fiction subset of books3. It can generate stories longer than the training context with ALiBi.

- 7B parameters

- 65k pretraining context tokens

- Cost: approximately $4k for fine-tuning

- MPT-7B-Instruct:

MPT-7B-Instruct is a model for short-form instruction following. Built by fine-tuning MPT-7B on a dataset we also release, derived from Databricks Dolly-15k and Anthropic’s Helpful and Harmless datasets.

- MPT-7B를 Databricks Dolly-15k 및 Anthropic의 Helpful and Harmless 데이터셋에서 파생된 데이터셋으로 fine-tuning하여 구축

- License: CC-By-SA-3.0

- MPT-7B-Instruct

- MPT-7B-Instruct is a model for short-form instruction following. It was finetuned with 15k tokens of text derived from Dolly and Helpful and Harmful. It can be fine-tuned for various tasks.

- 7B parameters

- 15k instruction tokens

- Cost: approximately $4k for fine-tuning

- MPT-7B-Chat:

MPT-7B-Chat is a chatbot-like model for dialogue generation. Built by fine-tuning MPT-7B on the ShareGPT-Vicuna, HC3, Alpaca, Helpful and Harmless, and Evol-Instruct datasets.

- MPT-7B-Chat은 대화 생성을 위한 챗봇 스타일의 모델로, MPT-7B를 ShareGPT-Vicuna, HC3, Alpaca, Helpful and Harmless, 그리고 Evol-Instruct 데이터셋에서 fine-tuning하여 구축함.

- MPT-7B-Chat is a chatbot-like model for dialogue generation. It was finetuned on ShareGPT and other datasets.

- 7B parameters

- 5+3.6k conversation tokens

- Cost: approximately $4k for fine-tuning