LongRoPE

- Related Project: Private

- Project Status: 1 Alpha

- Date: 2024-02-22

LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens

- url: https://arxiv.org/abs/2402.13753

- pdf: https://arxiv.org/pdf/2402.13753

- abstract: Large context window is a desirable feature in large language models (LLMs). However, due to high fine-tuning costs, scarcity of long texts, and catastrophic values introduced by new token positions, current extended context windows are limited to around 128k tokens. This paper introduces LongRoPE that, for the first time, extends the context window of pre-trained LLMs to an impressive 2048k tokens, with up to only 1k fine-tuning steps at within 256k training lengths, while maintaining performance at the original short context window. This is achieved by three key innovations: (i) we identify and exploit two forms of non-uniformities in positional interpolation through an efficient search, providing a better initialization for fine-tuning and enabling an 8x extension in non-fine-tuning scenarios; (ii) we introduce a progressive extension strategy that first fine-tunes a 256k length LLM and then conducts a second positional interpolation on the fine-tuned extended LLM to achieve a 2048k context window; (iii) we readjust LongRoPE on 8k length to recover the short context window performance. Extensive experiments on LLaMA2 and Mistral across various tasks demonstrate the effectiveness of our method. Models extended via LongRoPE retain the original architecture with minor modifications to the positional embedding, and can reuse most pre-existing optimizations.

Contents

- LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens

TL;DR

LongRoPE의 도입으로 LLM의 context window 확장에 관한 연구

- 대규모 언어모델(LLM)의 context window을 효과적으로 확장하는 방법 제시

- Rotary Positional Embedding (RoPE)의 비균일 확장을 통한 새로운 방법 도입

- 비균일 위치 보간법을 이용한 LongRoPE 방법을 소개하고 그 효과성 검증

1. 서론

최근 LLM들은 다양한 자연 언어 처리 작업에서 향상된 성능을 보여주고 있지만, 기존 모델들은 context window(context window)의 한계로 인해 성능이 제한되는 문제가 있습니다. 특히 많은 예시들을 포함하는 인-컨텍스트 학습이나 LLM 에이전트 구현 시 이런 제약은 더욱 두드러집니다. 이에 본 논문에서는 RoPE의 확장을 통해 LLM의 context window을 2백만 토큰 이상으로 확장할 수 있는 새로운 방법인 LongRoPE을 제안합니다.

2. 배경 및 관련 연구

기존의 연구에서는 RoPE를 활용하여 위치 정보를 효율적으로 인코딩하는 방법이 다양하게 제안되었습니다. RoPE는 각 토큰 위치에 대한 rotation 각도를 이용하여 위치 정보를 인코딩하는 방식으로, 각 위치 인덱스 \(n\)에 대하여 다음과 같이 표현됩니다.

\[\left[ \cos(n \theta_0), \sin(n \theta_0), \cos(n \theta_1), \ldots, \cos(n \theta_{d/2-1}), \sin(n \theta_{d/2-1}) \right]\]이런 방식은 특히 LLM의 context window을 확장하는 데 있어 중요한 역할을 합니다. 그러나 기존 방법들은 일정한 확장 비율 \(s\)에 따라 모든 차원에 대해 동일하게 rotation frequencies \(\theta_i\)를 조정하는 방식을 취하고 있습니다. 이는 위치 정보의 ‘혼잡함’을 초래하여 모델이 인접 토큰을 구별하는 데 어려움을 겪게 합니다. 본 연구에서는 이런 문제를 해결하기 위해 다차원의 비균일성을 고려한 새로운 위치 보간 방법을 제안합니다.

3. LongRoPE 방법

3.1 비균일 위치 보간

LongRoPE는 위치 보간에 있어서 두 가지 비균일성을 고려합니다. RoPE 차원의 비균일성과 토큰 위치의 비균일성입니다. 이를 통해 각 RoPE 차원에 대해 효과적인 재조정 인자를 도출하고, 이를 바탕으로 LLM의 context window을 대폭 확장합니다. 이 과정에서 진화적 탐색 알고리즘을 사용하여 탐색 공간의 효율성을 높입니다.

3.2 context window 확장

LongRoPE는 초기에 256k 길이로 모델을 파인튜닝한 후, 비균일 위치 보간을 통해 2048k까지 확장합니다. 이는 기존에 필요했던 대규모 파인튜닝 없이도 실현 가능합니다. 또한, 원래의 짧은 context window에서의 성능 저하를 방지하기 위해 추가적인 조정을 통해 RoPE 재조정 인자를 최적화합니다.

4. 실험 및 평가

LongRoPE을 다양한 LLM과 context length의 작업에 적용하여 그 효과성을 검증했습니다. 특히 256k 이상의 맥락에서 높은 퍼플렉서티를 유지하며, 표준 벤치마크 내에서도 경쟁력 있는 성능을 보여주었습니다. 이는 LongRoPE이 context window을 효과적으로 확장할 수 있음을 입증합니다.

5. 결론

LongRoPE은 LLM의 context window을 효과적으로 확장할 수 있는 새로운 방법을 제공합니다. 이는 비균일 위치 보간을 통해 위치 정보의 손실을 최소화하고, 파인튜닝 없이도 context window을 대폭 확장할 수 있는 가능성을 확인합니다.

1 Introduction

Large Language Models (LLMs), despite remarkable suc- cess on various tasks (OpenAI et al., 2023; Touvron et al., 2023), often suffer from limited context window size, e.g., LLaMA2’s 4096 token limit (Touvron et al., 2023). Beyond the context window, LLM’s performance declines due to the additional positions that the model has not been trained on. This poses challenges in important scenarios like in-context learning with numerous examples (Huang et al., 2023) and LLM agents (Park et al., 2023; Madaan et al., 2023).

Figure 1. Books3 perplexity comparison between LongRoPE and state-of-the-art long-context LLMs using other extension methods.

Recent works show that a pre-trained LLM context window can be extended to around 128k by fine-tuning on longer texts (Chen et al., 2023b;a; Peng et al., 2023; Zhang et al., 2024; Liu et al., 2023). There are three major obstacles to further extend the context window. First, untrained new position indices introduce many catastrophic values, leading to out-of-distribution issues and making fine-tuning diffi- cult to converge (Chen et al., 2023a). This is particularly challenging when an extension from 4k to >1000k intro- duces more than 90% new positions. Second, fine-tuning usually requires texts of corresponding lengths. However, long texts in current datasets, especially those exceeding 1000k, are limited. Moreover, training on extra-long texts is computationally expensive, requiring prohibitively ex- tensive training hours and GPU resources. Third, when extending to extremely long context windows, the attention becomes dispersed as it’s spread thinly across numerous to- ken positions, degrading performance on the original short context (Chen et al., 2023a).

One approach to mitigate the first challenge is to interpolate RoPE positional embedding (Su et al., 2021; Chen et al., 2023a), which downscales new position indices to the pretrained range, as shown in Fig.2. Position Interpolation (PI) (Chen et al., 2023a) linearly interpolates RoPE’s ro- tary angles by the extension ratio. NTK (LocalLLaMA, 2023b;a) advocates unequal interpolation and extrapolation across RoPE dimensions. YaRN (Peng et al., 2023) catego- rizes RoPE dimensions into three frequency-based groups and applies extrapolation, NTK, and linear interpolations, respectively. However, positional embedding exhibits com- plex non-uniform information entropy in the Transformer architecture. Such subtle non-uniformity is not effectively leveraged by existing approaches, leading to information loss and hence limiting the context window size.

Section 2 reveals two key findings empirically: (1) Effective positional interpolation should consider two forms of non- uniformities: varying RoPE dimensions and token positions. Lower RoPE dimensions and initial starting token positions benefit from less interpolation, but the optimal solutions de- pend on the target extended length. (2) By considering these non-uniformities into positional interpolation, we can effec- tively retain information in the original RoPE, particularly key dimensions and token positions. This minimizes the loss caused by positional interpolation, and thus provides better initialization for fine-tuning. Moreover, it allows an 8× extension in non-fine-tuning scenarios.

Motivated by the findings, we introduce LongRoPE, an effective method that extends the LLM context window be- yond 2 million tokens. LongRoPE is based on three key in- novations. First, LongRoPE fully exploits multidimensional non-uniformities in positional interpolation. It identifies effective rescale factors for RoPE’s rotation angles for each RoPE dimension, based on token positions. As the search space that identifies rescale factors expands exponentially with the target extension ratio, LongRoPE introduces an evolutionary search algorithm with two optimization tech- niques to boost search efficiency. Fig. 2 shows an example of the searched rescaled RoPE.

Then, LongRoPE leverages an efficient, progressive exten- sion strategy to achieve a 2048k context window without the need of direct fine-tuning on texts with extremely long lengths, which are scarce and hardly available. The strategy begins by searching for a 256k length on the pre-trained LLM and fine-tuning it under this length. Then, as our non- uniform positional interpolation allows for an 8× extension in non-fine-tuning settings, we conduct a second search for new RoPE rescale factors on the fine-tuned extended LLM. This ultimately achieves the 2048k context window for LLaMA2 and Mistral (Jiang et al., 2023).

Finally, to mitigate performance degradation on the original (shorter) context window, LongRoPE continues to adjust the RoPE rescale factors on the extended LLM. Similar to scaling up from 256k to 2048k, we scale down to 4k and 8k context windows on the 256k fine-tuned LLM using our

search algorithm to encourage less positional interpolation. During inference, if the sequence length is less than 8k, we update RoPE with the searched rescale factors.

Extensive experiments across different LLMs and various long-context tasks demonstrate the effectiveness of our method. We show that LongRoPE is highly effective in maintaining low perplexity from 4k to 2048k evaluation length, achieving over 90% passkey retrieval accuracy, and delivering comparable accuracy on standard benchmarks designed within the 4096 context window. LongRoPE can be applied to any LLMs based on RoPE embedding. We will release our code and LongRoPE-2048k models.

2 Non-uniformity in Positional Interpolation

2.1. Preliminary

Transformer Models and Positional Information:

Transformer models require explicit positional information, often in the form of position embedding, to represent the order of input tokens. Our work focuses on the RoPE (Rotary Position Embedding) as detailed by Su et al., 2021, which is widely used in recent large language models (LLMs). For a token at position index \(n\), its corresponding RoPE encoding can be simplified as follows:

\[[\cos(n\theta_0), \sin(n\theta_0), \cos(n\theta_1), \ldots, \cos(n\theta_{d/2-1}), \sin(n\theta_{d/2-1})]\](1) where \(d\) is the embedding dimension, \(n\theta_i\) is the rotary angle of the token at position \(n\), \(\theta_i = \theta \frac{-2i}{d}\) represents the rotation frequencies. In RoPE, the default base value of \(\theta\) is 10000.

Context Window Extension Ratio \(s\) and Positional Interpolation:

We define \(s\) as the ratio of extended context length \(L'\) to the original length \(L\): \(s = \frac{L'}{L}\). To extend the context window from \(L\) to \(L'\), current positional interpolation methods suggest downscaling rotation frequencies \(\theta_i\) based on the extension ratio \(s\). Let \(\beta = \frac{\theta}{2d}\), and \(\lambda\) denote the actual rescale factor related to \(s\), we unify these positional interpolation methods as follows:

Linear Positional Interpolation (PI):

PI (Chen et al., 2023a) suggests linear interpolation of position indices within the pre-trained length limit. For a target extension ratio \(s\), the rotation angles of all positions are linearly reduced by \(\lambda = s\) across all RoPE dimensions. However, this makes the position information very “crowded”, hindering the model’s ability to distinguish closely positioned tokens. Therefore, PI tends to underperform at high extension ratios.

NTK-based Interpolation and Extrapolation:

(LocalLLaMA, 2023b;a) look at RoPE from an information encoding perspective and apply the Neural Tangent Kernel (NTK) theory (Jacot et al., 2018; Tancik et al., 2020). To mitigate the crowded-positions issue in PI, they suggest to distribute interpolation pressure across RoPE dimensions. It scales lower (high frequency) dimensions less and higher (low frequency) dimensions more, resulting in both positional interpolation and extrapolation, where \(\lambda = s_i\). The improved dynamic NTK (LocalLLaMA, 2023a) adjusts the extension ratio at each position based on the current sequence length. Unlike PI, which necessitates fine-tuning, NTK-aware methods can extend context windows in non-fine-tuning scenarios, but usually with a maximum extension ratio of 4×.

YaRN (Peng et al., 2023):

Introduces a significant improvement to positional interpolation performance. It divides RoPE dimensions into three frequency-based groups, each with a different interpolation strategy. High frequency dimensions undergo extrapolation (\(\lambda=1\)), while low frequency dimensions use linear interpolation (PI). The RoPE dimensions that fall in-between employs the NTK. The key of YaRN lies in its grouping of RoPE dimensions, which currently depends on human-led empirical experiments. This may result in sub-optimal performance for new LLMs.

2.2. Study on Non-uniform Positional Interpolation

Inspired by NTK and YaRN, we notice their gains from non-linearity, specifically in considering different frequencies across RoPE dimensions for specialized interpolation and extrapolation. However, current non-linearities heavily rely on human-designed rules. This naturally raises two questions:

- Is the current positional interpolation optimal?

- Are there unexplored non-linearities?

Table 3. Proof-pile perplexity of the extended LLaMA2-7B with a 64k context window in non-fine-tuned and fine-tuned settings.

To answer these questions, we use evolution search (see Sec. 3) to discover better non-uniform positional interpolations for LLaMA2-7B. The search is guided by perplexity, using 5 random samples from PG19 (Rae et al., 2019) validation set. Through our empirical analysis, we reveal the following key findings.

Finding 1: RoPE dimensions exhibit substantial non-uniformities, which are not effectively handled by current positional interpolation methods.

We search the optimal $\lambda$ for each RoPE dimension in Eq. 2. Table 1 compares the perplexity of LLaMA2-7B under different methods on PG19 and Proof-pile (Azerbayev et al., 2022) test sets, without fine-tuning. Our searched solution shows significant improvements, suggesting that current linear (PI) and non-uniform (Dynamic-NTK and YaRN) interpolations are sub-optimal. Notably, YaRN underperforms than PI and NTK on PG19, as it doesn’t reach the target context window length for non-fine-tuned LLM. For example, YaRN’s perplexity spikes after 7k in an 8k context size.

Through our search, the rescaled factors $\lambda$ in Eq. 2 become non-uniform, differing from the fixed scale $s$ in PI, NTK’s formula calculation, and YaRN’s group-wise calculation. These non-uniform factors significantly improve LLaMA2’s language modeling performance (i.e., perplexity) for 8k and 16k context windows without fine-tuning. This is because the resulting positional embedding effectively preserves the original RoPE, especially key dimensions, thus reducing LLM’s difficulty in distinguishing close token positions.

Finding 2: RoPE for the initial tokens in the input sequence should be extrapolated with less interpolation.

For the initial \(\hat{n}\) tokens in input sequences, we hypothesize that their RoPE should do less interpolation. This is because they receive large attention scores, making them crucial to attention layers, as observed in Streaming LLM (Xiao et al., 2023) and LM-Infinite (Han et al., 2023). To verify this, we extend the context window to 8k and 16k using PI and NTK, keeping the first \(\hat{n}\) (0,2, …, 256) tokens without interpolation. When \(\hat{n}=0\), it reverts to the original PI and NTK. Table 2 highlights two key observations:

- Retaining the starting tokens without position interpolation indeed improves the performance.

- The optimal number of starting tokens, \(\hat{n}\), depends on the target extension length.

Finding 3: Non-uniform positional interpolation effectively extends LLM context window in both fine-tuning and non-fine-tuning settings.

While we’ve shown that our searched non-uniform position interpolation significantly improves the extension performance at 8k and 16k without fine-tuning, longer extensions require fine-tuning. As such, we fine-tune LLaMA2-7B with our searched RoPE for a 64k context window size (see Appendix for settings). As Table 3 shows, our method significantly outperforms PI and YaRN, both before and after fine-tuning LLaMA2-7B. This is due to our effective use of non-uniform positional interpolation, minimizing information loss and providing a better initialization for fine-tuning.

Summary. Our study uncovers two non-uniformities: varying RoPE dimensions and token positions. Utilizing these non-uniformities effectively in positional interpolation greatly improves LLM context extension performance.

3. LongRoPE

Motivated by the findings, we present LongRoPE, which first introduces an efficient search algorithm to fully exploit the two non-uniformities, and then uses it to extend LLM context window beyond 2 million tokens.

3.1. Problem Formulation

The two non-uniformities can lead to a vast solution space and introduce complexities in optimization. To address it, we frame the multidimensional non-uniform position interpolation optimization problem as a search problem.

Table 4. Search space for RoPE rescale factors. Tuples of three values represent the lowest value, highest, and step size. Non-uniformity Notation RoPE dimension

where we introduce a set of rescale factors, $I(\hat{\lambda}_i, \hat{n})$, to cover the two forms of non-uniformities. $\hat{\lambda}_i$ and $\hat{n}$ denote the non-uniformity of RoPE dimensions and token positions, respectively. Specifically, we use $I(\hat{\lambda}_i, \hat{n})$ to rescale the rotation angle for the ith RoPE dimension, where $\hat{\lambda}_i$ is the rescale factor and $\hat{n}$ is the token position threshold. For initial $\hat{n}-1$ token positions, the rescale factor $\hat{\lambda}_i$ will not take effect, and the original RoPE rotary angle $n \beta_i$ is used. For tokens at positions $n \geq \hat{n}$, the rescale factor is applied.

Given a target context window size of $L’$, our objective is to find the optimal rescale factors $(I(\hat{\lambda}_0, \hat{n}), I(\hat{\lambda}_1, \hat{n}), … I(\hat{\lambda}_i, \hat{n}), …)$ from the 1st to the $d$-th RoPE dimension. As a result, the target LLM, with the rescaled RoPE, can achieve a minimum next token prediction loss, $L$ (i.e., the perplexity), for input samples $X$ with a token length of $L’$.

3.2. Searching the Non-uniform Position Interpolation

To solve the problem in Eq. 3, we now introduce our simple yet highly effective method, which searches for the optimal RoPE rescale factors to fully exploit the multidimensional non-uniformities in position embedding.

Search space. We design a large search space to include the two non-uniformities. Table 4 illustrates the search space. Specifically, we allow the search of a specialized rescale factor for each dimension in RoPE embedding. To simplify search space design, we search $\lambda_i$ and $\hat{n}$ instead of searching for $I(\hat{\lambda}_i, \hat{n})$, where $\hat{\lambda}_i = 1/\lambda_i$. As shown in Table 4, $\lambda_i$ is allowed to search from a minimum value of 1.0 (i.e., direct extrapolation) to a maximum value of $s \times 1.25$ (i.e., larger interpolation than PI) with a step size of 0.01, where $s$ is the target context window extension ratio.

$\hat{n}$ controls the number of initial token positions that are retained without position interpolation (i.e., use the original RoPE embedding). Empirically, we allow $\hat{n}$ to search from ${0, 1, 2, 4, 8, 12, 16, 20, 24, 28, 32, 64, 128, 256}$. When $\hat{n} = 0$, all token positions use the searched rescale factors.

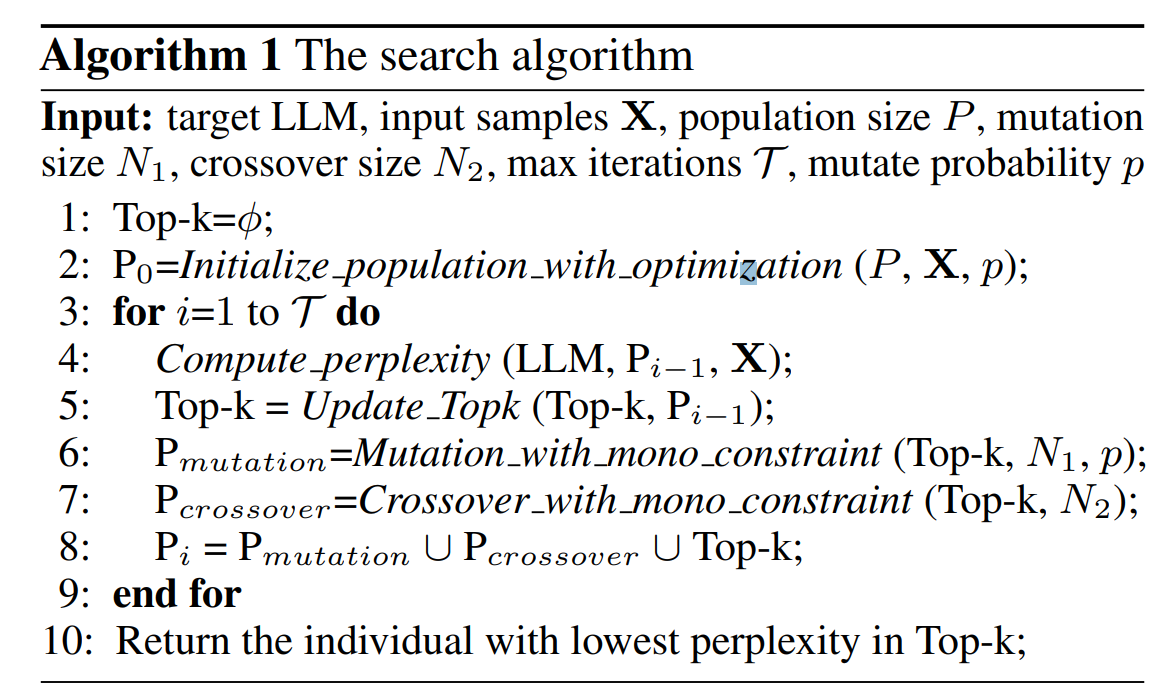

Evolution-based search. Our search space in Table 4 spans numerous positional interpolation solutions, posing a significant challenge for efficient exploration. For example, a $s = 4\times$ extension leads to $400128/2 \times 14=4\times10^{167}$ choices. With a larger extension ratio, the search space expands exponentially. To address this, we use evolution search (Guo et al., 2020) and introduce two optimization techniques to greatly boost search efficiency. Algorithm 1 illustrates the overall search procedure.

Optimized initial population generation. Instead of initializing a population of \(P\) rescale factors randomly, we add the three RoPE rescale factors corresponding to PI, NTK, and YaRN as individuals into the initial population. For the remaining \(P -3\) individuals, we randomly mutate the three rescale factors with a probability of \(p\).

Monotonically non-decreasing constraint. After generating the initial population, we compute LLM perplexity for each individual. Specifically, we apply the corresponding RoPE rescale factors to the target LLM and compute the perplexity of input \(X\). The top-k individuals become parents for evolution. However, the vast search space can cause naive mutation and crossover to explore poor solutions, leading to unnecessary perplexity computations. This is particularly inefficient when \(L'\) is large, given the time-consuming inference of each perplexity calculation.

To address this, we impose a non-decreasing monotonicity constraint on the sampled RoPE rescaled factors: \(\lambda_i \leq \lambda_{i+1}\). Only RoPE that satisfies this constraint is applied to LLM for perplexity evaluation, significantly reducing the search costs. Specifically, we require that \(\lambda_i\) increases monotonically with the RoPE dimension (i.e., \(i=0,...,63\)). This dimensional monotonicity is based on the NTK theory (Jacot et al., 2018; Tancik et al., 2020; LocalLLaMA, 2023b), suggesting that lower dimensions with higher frequency require less interpolation (i.e., a smaller \(\lambda_i\)), and higher dimensions with lower frequency can do more interpolation (i.e., a larger \(\lambda_i\)).

8× extension without fine-tuning. Our evolutionary search effectively identifies non-uniform RoPE rescale factors, preserving key dimensions and positions to minimize interpolation-induced information loss. As depicted in Fig.3, our method is able to extend LLaMA2’s context window from 4k to 32k without fine-tuning. In contrast, existing methods such as PI, and non-uniform NTK and YaRN cause perplexity to spike after 2× extension.

**Algorithm 1 **

Figure 3. LLaMA2-7B perplexity on PG19 and Proof-Pile after extension using different methods, measured without fine-tuning. By fully exploiting the non-uniformities, LongRoPE achieves an 8× extension without fine-tuning.

3.3. Extending LLM Context Window to 2048K

Progressive extension to 2048k. We now introduce our method to extend the context window of pre-trained LLMs from the traditional 4k to over 2048k. As demonstrated, our non-uniform positional interpolation can achieve 8× extension without fine-tuning. For larger extensions (i.e., 512×) is required, fine-tuning is necessary. One method is to search for RoPE rescaled factors under the target 2048k size and then fine-tune. However, this faces challenges due to the prohibitively expensive training resources. Moreover, based on our experiences, it’s challenging to well fine-tune the LLMs under a large extension ratio (see Appendix).

Fortunately, LongRoPE is effective for both the original and fine-tuned extended LLM. Therefore, we introduce an efficient, progressive method that achieves the target 2048k with just 1k fine-tuning steps at within 256k training length. ♢ Extending pre-trained LLM to 256k with LongRoPE search. Taking LLaMA2 as an example, we conduct search for target context window size of 128k and 256k. The ex- tension ratio at this stage is 32× and 64×, respectively. ♢ Fine-tuning to 256k. Then, we fine-tune the pre-trained LLM to achieve the context window size of 256k. Specif- ically, we first fine-tune LLaMA2 for 400 steps using the RoPE rescaled factors for 128k. Then, we replace the RoPE rescaled factors to 256k on the finished checkpoint and con- duct an additional 600 steps of fine-tuning. This method proves more efficient than directly fine-tuning to 256k. ♢ Extending fine-tuned extended LLM to 2048k with Lon- gRoPE search. Finally, we perform a secondary search on the fine-tuned 256k-length LLM. This ultimately results in an extremely large context window size of 2048k without further fine-tuning. The final extension ratio is 512×.

Shorter context window recovery. After extending to an extremely long 2048k context window, we notice a perfor- mance drop within the original context window. This is a known issue of positional interpolation (Chen et al., 2023a), as it forces position embedding in higher dimensions within the original context window to reside in a much narrower region, negatively affecting the language model’s perfor- mance. With a 512× extension ratio, positions within the original 4k context window become particularly crowded.

To mitigate this, we perform an extra evolution search on the extended LLM to adjust RoPE rescale factors for short context lengths (e.g., 4k and 8k). We reduce the maxi- mum allowed searched λ due to less positional interpolation required for shorter lengths. During inference, the LLM dy- namically adjusts the corresponding RoPE rescale factors.

4. Experiments

4.1. Setup

Evaluation Tasks and models. We apply LongRoPE on LLaMA2-7B and Mistral-7B, and evaluate the performance on three aspects: (1) perplexity of extended-context LLMs on long documents; (2) Passkey retrieval task that measures a model’s ability to retrieve a simple passkey from a sea of irrelevant text; and (3) Standard LLM benchmarks within a short 4096 context window size.

Fine-tuning. For LLaMA2, we use a learning rate of 2e-5 with linear decay and a global batch size of 32. We fine- tune for 400 steps on Redpajama (Computer, 2023) dataset, chunked into 128k segments bookended with the BOS and EOS tokens. Then, based on the finished checkpoint, we train an additional 600 steps to achieve 256k context window. The 128k context size is trained on 8 A100 GPUs with the distributed training system (Lin et al., 2023), while the 256k requires 16 A100 GPUs. In the case of Mistral, a constant learning rate of 1e-6 and a global batch size of 64 are used. For both 128k and 256k models, we follow the setting in YaRN (Peng et al., 2023), with 400 steps on the Together Computer’s Long-Data Collections (mis, 2024) using 16k sequence length. We use 4 A100 GPUs for training.

Search. For target window size within 256k, we use: P =64, N1=N2=16, p=0.3, T =40, and select top-32 for mutation/crossover in each iteration. Perplexity is calcu- lated using 5 random PG19 validation set samples, with a minimum length requirement of the target context length. For windows over 512k, we halve the population, mutation,

and crossover sizes. Perplexity is measured on 3 random samples from Pile-Books3 (Gao et al., 2020) validation set.

Baselines. To reach 2048k, we fine-tuned models with 128k and 256k context windows. This yields LongRoPE-2048k (ft=128k) and LongRoPE-2048k (ft=256k) for LLaMA2 and Mistral, respectively. We compare the four models with state-of-the-art context window extension baselines, specifically open-sourced LLMs fine-tuned after positional interpolation using PI, NTK and YaRN. This includes Together-32k (Together, 2023), Code LLaMA (Rozi`ere et al., 2023), LongLoRA-full-FT-100k (Chen et al., 2023b), YaRN-LLaMA and YaRN-Mistral (Peng et al., 2023).

4.2. Main Results

Long sequence language modeling within 256k. We begin by comparing with state-of-the-art extended LLMs within a 256k evaluation length. We use two datasets to demon- strate our generalizability: Proof-pile (Rae et al., 2019) and PG19 (Gao et al., 2020) test splits. We evaluate perplexity at various context lengths using sliding window of 256. For PG19, we use the whole test split of 100 documents. For Proof-pile, we follow YaRN (Peng et al., 2023) to randomly select 10 samples, each with at least 128k lengths.

Table 5 and Table 7 compare the perplexity of LLaMA2 and Mistral extended via different interpolation methods on Proof-pile and PG19, respectively. We highlight two key observations: (1) our extended models show an overall de- creasing perplexity trend from 4k to 256k evaluation lengths, proving their abilities to leverage longer context. (2) Even with a context window 16× longer, a condition typically challenging for maintaining performance at shorter lengths, our LongRoPE-2048k models outperform state-of-the-art baselines within 256k context length.

Long sequence language modeling beyond 2000k. To evaluate the effectiveness on extremely long documents, we use the Books3 (Gao et al., 2020) dataset. For evaluation efficiency, we randomly select 20 books, each exceeding 2048k in length, and use a sliding window of 256k.

As shown in Table 6, LongRoPE successfully extends LLaMA2-7B and Mistral-7B’s context window to 2048k, while also achieving perplexity comparable or superior to baselines within shorter lengths of 8k-128k. We also ob- serve notable performance differences between the 2048k LLaMA2 and Mistral. Mistral outperforms baselines at shorter lengths, but perplexity exceeds 7 beyond 256k. LLaMA2 performance aligns with expectations: the perplex- ity decreases gratefully with longer contexts, with marginal increases at 1024k and 2048k. Moreover, on LLaMA2, LongRoPE-2048k performs better at a fine-tuning length of 256k over 128k, due to the smaller secondary extension ratio (i.e., 8× vs. 16×). In contrast, Mistral performs better at fine-tuning window size of 128k. The main reason is that for Mistral’s 128k and 256k fine-tuning, we follow YaRN’s setting to use a 16k training length, which affects Mistral’s ability to further extend context window after fine-tuning.

Table 5. Proof-pile perplexity of models with various positional interpolation methods. ft: the context window size used in fine-tuning. Even with a context window 16× longer than current long-context models, our models also outperform them within 256k context length.

Table 6. Perplexity evaluation on Books3 dataset. Without additional fine-tuning, our LongRoPE-2048k models, with a training context window size of 128k and 256k, effectively scale to an extremely long context size of 2048k. 1k=1024 tokens.

Passkey retrieval. We now study the effective context window size in generation tasks. We follow a synthetic eval- uation task of passkey retrieval proposed by (Mohtashami & Jaggi, 2023). In this task, the model is asked to retrieve a random passkey (i.e., a five-digit number) hidden in long document. The prompt template is detailed in appendix. We perform 10 iterations of the passkey retrieval task with the passkey placed at a random location uniformly distributed across the evaluation context length.

Fig. 4 shows the retrieval accuracy comparison with base- lines. Existing models’ accuracy rapidly drops to 0 beyond 128k. In contrast, despite the very challenging task of retrieving a passkey from million-level tokens, our LongRoPE- LLaMA2-2048k (ft=256k) manage to maintain a high re- trieval accuracy (≥90%) from 4k to 2048k. LongRoPE- Mistral-2048k (ft=128k) keeps 100% accuracy up to 1800k, dropping to 60% at 2048k, aligning with expectations from Table 6, where the perplexity slightly increases at 2048k.

Figure 4. Passkey retrieval accuracy of long-context LLMs. It showcases the remarkable ability of our models to accurately re- trieve a passkey from a vast pool of million-level tokens.

Standard benchmarks within original context window. We evaluate LongRoPE-2048k models on the original context window using Hugging Face Open LLM Leader- board (Face, 2024) in zero-shot and few-shot settings. We use 25-shot ARC-Challenge (Clark et al., 2018). 10-shot HellaSwag (Zellers et al., 2019), 5-shot MMLU (Hendrycks et al., 2020), and 0-shot TruthfulQA (Lin et al., 2021).

Table 8. Comparison of long-context LLMs with original LLaMA2 and Mistral on the Hugging Face Open LLM benchmark.

Table 10. Ablation study on LongRoPE readjustment for perfor- mance recovery at shorter context lengths.

Table 9. Books3 perplexity comparison of extending LLaMA2- 256k via different secondary positional interpolation methods. Context Window Size Extension 1024k 512k Method

As Table 8 shows, our models achieve comparable results on the original benchmark designed for a smaller context window, and even outperform the original Mistral on Truth- fulQA by +0.5%. LongRoPE-LLaMA2-2048k, fine-tuned at 256k, shows slightly more performance degradation, but remains within reasonable ranges for most tasks.

4.3. Ablation Results

Effectiveness of the second positional interpolation. In our progressive extension strategy, we use our search al- gorithm to conduct a second non-uniform positional inter- polation on the fine-tuned extended LLMs. We validate its effectiveness by running experiments on our fine-tuned LLaMA2-256k model. We extend it to 512k, 1024k, and 2048k using PI and YaRN. As Table 9 shows, our non- uniform positional interpolation sustains a consistent level of perplexity. In contrast, the perplexity under PI and YaRN quickly increases with the extension ratio.

Effectiveness of recovery at shorter context lengths. To mitigate performance loss at shorter context lengths, we readjust the RoPE factors for LongRoPE-2048k via our search algorithm. Specifically, we decrease the maximum allowable scale factors for the search to encourage less in- terpolation at short 4k and 8k lengths. Table 10 shows the perplexity comparison of LongRoPE-LLaMA2-2048k on Proof-pile at 4k and 8k lengths, along with the average LLM benchmark accuracy. The results clearly demonstrate a sig- nificant performance improvement at short context lengths.

Analysis on the two forms of non-uniformities. Finally, we ablate on the two non-uniformities to see how each part contributes to the performance. We setup two experiments: (i) extending LLaMA2-7B to short 16k and 32k using differ- ent methods—PI, searching for RoPE dimension only, and searching for both non-uniformities; (ii) extending our fine- tuned 256k-length LLaMA2 to 2048k following the same procedure. The perplexity is evaluated without fine-tuning. As Table 11 shows, non-uniformity in RoPE dimension sig- nificantly reduces perplexity compared to PI’s linear inter- polation. Non-uniformity in token position clearly improves performance at 16k and 32k lengths but does not show the same impact at 2048k, possibly due to the extremely long length. Preserving only the initial tokens without interpola- tion becomes non-useful, and we leave this as future work.

- Related Works In addition to methods based on position interpolation, this section discusses related works of other approaches.

- etrieval-based approaches use an external memory mod- ule to memorize long past context and retrieval modules for related documents fetching at inference (Tworkowski et al., 2023; Wang et al., 2023; Borgeaud et al., 2022). These designs typically need explicit modifications on the LLM architectures. Our work, in contrast, is more lightweight, with minor positional embedding modifications. We can also handle more long context tasks beyond retrieval, such as long document summarization and few-shot learning.

- Attention-based context window extensions. Beyond po- sitional embedding interpolation, some research achieves input context extension using the original LLM context win- dow length by manipulating attention mechanisms (Han et al., 2023; Xiao et al., 2023; Ratner et al., 2022). The key idea is to mitigate the attention explosion issue caused by new positions using novel attention masks. These efforts and positional interpolation methods are complementary.

- Fine-tuning based approaches focus on how to effectively fine-tune pre-trained LLMs with modified position embed- dings for longer context. Works like Code LLaMA (Rozi`ere et al., 2023), LLaMA2 Long (Xiong et al., 2023) and Scale-

- dRoPE (Liu et al., 2023) choose a very large base value for RoPE and fine-tune on the target length. Our method offers flexibility for various target lengths and can achieve beyond 2M length. More recently, as fine-tuning for long context lengths (i.e., over 128k) demands substantial GPU resources, LongLoRA (Chen et al., 2023b) and PoSE (Zhu et al., 2023) are proposed to mitigate this overhead. Our method is orthogonal to these efficient fine-tuning works.

- Conclusion In this work, we present LongRoPE, a method that remark- ably extends the context length of LLMs to an unprece- dented 2048k, while maintaining their capabilities within original shorter context window. We exploit two forms of non-uniformities in RoPE positional embedding using an efficient evolutionary search. This offers twofold benefits: it provides good initialization for fine-tuning and enables an 8× context window extension without fine-tuning. Building on this, we propose a progressive extension strategy using 256k-length fine-tuned LLMs to reach a 2048k context win- dow size without extra fine-tuning. Extensive experiments validate the effectiveness of LongRoPE. We envision that our LongRoPE-2048k models will enable many new long context applications and inspire further research.

Broader Impacts

This paper presents work whose goal is to advance the field of Machine Learning. There are many potential societal consequences of our work, none which we feel must be specifically highlighted here.